Diving into Multi-Core CPUs

Multi-core CPUs are integrated circuits that have two or more processor cores attached for enhanced performance and reduced power consumption. These processors also enable more efficient simultaneous processing of multiple tasks, for example through parallel processing and multithreading.

How Does it Work?

- The motherboard and the operating system need to recognise that the processor has multiple cores.

- An older OS designed for a single core might not work well if it were installed on a multi-core system.

- Windows 95 doesn’t support hyper-threading or multiple cores, so if it were installed on a multi-core system, it would not utilize the extra hardware, i.e. it would perform no better than on a single-core processor.

- In a single-core system, the operating system tells the motherboard that a process needs to be done, and the motherboard then instructs the processor.

- In a multi-core system, the operating system can tell the processor to do multiple things at once, with data moved from the hard drive or RAM to the processor through the motherboard.

Compared to a single-core processor, a dual-core processor is at best twice as powerful, and only in ideal circumstances. In practice, performance gains of around 50% are expected: a dual-core CPU is roughly 1.5 times as powerful as a single-core processor.
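One common way to reason about the gap between the ideal 2x and the practical 1.5x is Amdahl's law, which is not part of the original discussion and is brought in here only as an illustration. The sketch below assumes that a fixed fraction of a program can run in parallel; the two-thirds figure is an assumption chosen because it reproduces the 1.5x estimate, not a measured value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup predicted by Amdahl's law for a given parallel fraction."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is not sped up; the parallel part is divided across cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Ideal case: all of the work can be split across both cores.
ideal = amdahl_speedup(1.0, 2)

# Assumed case: only two thirds of the work can run in parallel.
typical = amdahl_speedup(2 / 3, 2)

print(f"ideal: {ideal:.2f}x, two-thirds parallel: {typical:.2f}x")
```

Under this model, the serial portion of a program limits the benefit of additional cores, which is one plausible reason real-world gains fall short of the ideal.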

As single-core processors hit their physical limits of complexity and speed, multi-core computing is becoming more popular. In modern times, the majority of systems are multi-core. Many-core or massively multi-core systems refer to systems with a huge number of CPU cores, such as tens or hundreds.

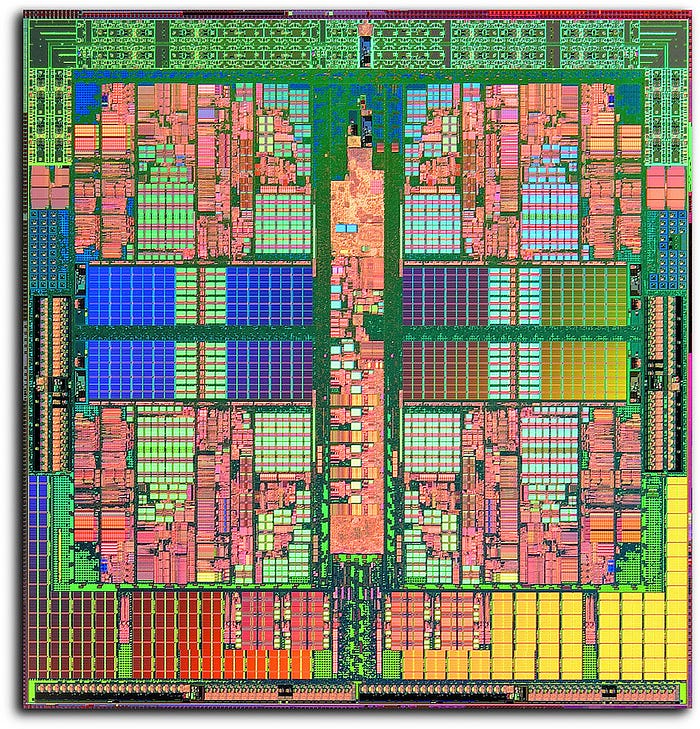

The architecture of Multicore Processor

A multi-core processor’s design enables communication between all available cores, and they divide and assign all processing duties appropriately.

The processed data from each core is transmitted back to the computer’s main board (Motherboard) via a single common gateway once all of the processing operations have been finished.

This method beats a single-core CPU in terms of total performance.

Different Types of Multi-Core Processors

- Number of cores. Different multicore processors often have different numbers of cores. For example, a quad-core processor has four cores. The number of cores is usually a power of two.

- Number of core types.

- Homogeneous (symmetric) cores. All of the cores in a homogeneous multicore processor are of the same type; typically the core processing units are general-purpose central processing units that run a single multicore operating system.

- Heterogeneous (asymmetric) cores. Heterogeneous multicore processors have a mix of core types that often run different operating systems and include graphics processing units.

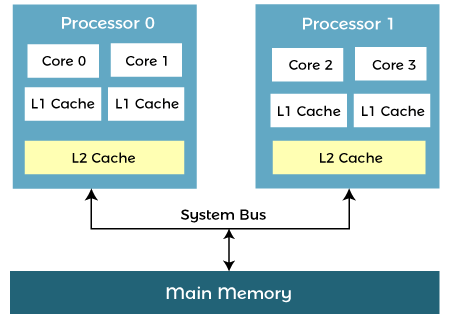

- Number and level of caches. Multicore processors vary in terms of their instruction and data caches, which are relatively small and fast pools of local memory.

- How cores are interconnected. Multicore processors also vary in terms of their bus architectures.

- Isolation. The amount, typically minimal, of in-chip support for the spatial and temporal isolation of cores:

- Spatial (physical) isolation ensures that different cores cannot access the same physical hardware (e.g., memory locations such as caches and RAM).

- Temporal isolation ensures that the execution of software on one core does not impact the temporal behavior of software running on another core.

Advantages of Multi-Core Processor

Performance:

It can do more work than a single-core processor. The short distances between the cores of an integrated circuit also allow them to communicate faster than separate processors could.

Reliability:

Software can be assigned to different cores. When one piece of software fails, the others remain unaffected. When a defect arises, it affects only one core. Hence, multi-core systems are better able to tolerate faults.

Software Interactions:

Even when pieces of software run on different cores, they can still communicate with one another. A multi-core processor can provide spatial and temporal isolation between cores, so threads on one core are less likely to be delayed by activity on another.

Multitasking:

An operating system can use a multi-core CPU to run two or more processes truly at the same time, rather than switching rapidly between them. A single application can benefit as well: Photoshop, for example, can perform two jobs at once.

Obsolescence Avoidance:

Architects can avoid technology obsolescence and increase maintainability by using multicore CPUs. Chipmakers are using the most recent technological advancements in their multicore CPUs. Single-core chips are becoming increasingly difficult to come by as the number of cores increases.

Isolation:

Multicore processors may increase (but do not guarantee) spatial and temporal isolation when compared to single-core systems. Software on one core is less likely to affect software on another core than if both were executing on the same single core.

Benefits of Multicore Processor

- When compared to single-core processors, a multicore processor has the potential of doing more tasks.

- Low energy consumption when doing many activities at once.

- Data takes less time to reach its destination since the cores are integrated on a single chip.

- Because the circuit is compact, signals travel shorter distances, which can increase speed.

- Detecting infections with anti-virus software while playing a game is an example of multitasking.

- It can accomplish numerous tasks at the same time while running at a lower clock frequency.

- In comparison to a single-core processor, it is capable of processing large amounts of data.

In modern times, multicore processors are found in the majority of devices such as tablets, desktops, laptops, smartphones and gaming systems.

The two core options demonstrate how a processor’s model name does not tell the entire story regarding performance. Compared to a dual-core i5, the performance of a quad-core i5 is substantially superior, and the price of the computer will reflect this. As of this writing, all of the current laptop models of the i5 are dual-core, whereas all of the desktop models are quad-core. Because laptop versions are dual-core rather than quad-core, an i5 in a laptop will have poorer performance than an i5 in a desktop. The dual-core type consumes less power and is better suited to portable laptops that require longer battery life, whereas a desktop, which does not depend on a battery, can use a CPU that draws more power, such as the quad-core model. Some applications of the multicore processor are as follows:

What are multicore Processors used for?

- Games with high-end graphics, such as Overwatch and Star Wars Battlefront, as well as other 3D games.

- Video and image editing software such as Adobe Premiere, Adobe Photoshop, and iMovie.

- Computer-aided design (CAD) software such as SolidWorks.

- Database servers and servers handling high network traffic.

- Embedded systems, such as industrial robots.

Brief History

When the first chip-based processors were manufactured, the companies making these chips could only fit one processor on a single chip. As the chip-making technology improved, it became possible for chip makers to make chips with more circuits, and eventually, the manufacturing technology reached the point where chip makers could manufacture chips with more than one processor and create multi-core chips.

Kunle Olukotun, a Stanford Electrical Engineering professor, and his students designed the first multi-core chip in 1998. In 2001, IBM introduced the world’s first multicore processor, a VLSI (very-large-scale integration) chip with two 64-bit microprocessors comprising more than 170 million transistors.

This breakthrough design in architecture and semiconductor engineering allowed these two processors to work together at very high bandwidth with large on-chip memories, and with high-speed busses and input/output channels.

Four of these new microprocessors working together as a powerful 8-way module established a new industry standard and produced a then-record clock speed of 1.3 gigahertz.

Why “more” cores and what does that truly mean?

Processor cores are simply units that are a part of any computer’s CPU. Their function is to receive instructions and carry out computing operations, where information is processed and stored temporarily in the RAM.

In the 1990s, the performance of a microprocessor went hand in hand with its clock frequency. However, as chip architectures improved rapidly, the performance gained by raising the frequency, which itself flattened over time, began to dry up for uni-core processors. The physical size of chips decreased while the number of transistors per chip increased; clock speeds rose, which pushed heat dissipation across the chip to dangerous levels.

Multi-core processors were introduced to tackle the issues prevalent in uni-core systems, such as power consumption, heat dissipation, and frequency limits. Multi-core processors run at lower frequencies, but they have proven to be the better option compared with uni-core processors, courtesy of the simple logic: “Two heads are better than one.”

To put it in simple terms, the speed at which a computer runs programs can be said to be proportional to the number of cores present. A two-core processor is called dual-core, a four-core processor is called quad-core, and so on. But it is not quite that simple: certain factors affect the ability of a multi-core processor to speed up processes, such as its clock speed and the ability of a program to capitalize on the processor’s multiple cores.

To understand processor speed, note that processors are electronic circuits that perform mathematical calculations in a fraction of a second. The time it takes a processor to finish a basic calculation is a cycle. The more cycles per second, the faster the processor can do its calculations. Most processors today are measured in gigahertz, or billions of cycles per second. A 2 gigahertz processor runs 2 billion cycles per second; similarly, one that is 2.3 gigahertz runs 2.3 billion cycles per second. A dual-core processor running at 2 gigahertz can run a total of 4 billion cycles per second, i.e. 2 billion for each core.
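The cycle arithmetic above can be written out as a short calculation. This is only a sketch of the aggregate figure; the function name is made up for illustration, and the total says nothing about how fast any single program runs, since that depends on whether the program can use more than one core.

```python
def total_cycles_per_second(clock_ghz: float, cores: int) -> float:
    """Aggregate cycles per second across all cores (hypothetical helper)."""
    cycles_per_core = clock_ghz * 1e9  # 1 GHz is one billion cycles per second
    return cycles_per_core * cores


single_core_2ghz = total_cycles_per_second(2.0, 1)
single_core_2_3ghz = total_cycles_per_second(2.3, 1)
dual_core_2ghz = total_cycles_per_second(2.0, 2)

for label, cycles in [
    ("single-core, 2.0 GHz", single_core_2ghz),
    ("single-core, 2.3 GHz", single_core_2_3ghz),
    ("dual-core, 2.0 GHz", dual_core_2ghz),
]:
    print(f"{label}: {cycles / 1e9:.1f} billion cycles per second")
```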

Applications

The wide potential of multi-core processors (MCPs) stems from their ability to perform multiple tasks in parallel. This includes overall tasks such as pipelining many chunks of data simultaneously for high system throughput or enabling more complex control algorithms. Any application that can be divided into parallel pieces is suitable for multi-core processors.
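As a rough illustration of dividing an application into parallel pieces, the sketch below splits a list into chunks and hands each chunk to a separate worker process using Python's standard `concurrent.futures` module. The function names and the sum-of-squares workload are assumptions made for the example; a real application would substitute its own independent units of work.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """CPU-bound work that needs nothing from the other chunks."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, workers=None):
    """Process each chunk in its own worker process, then combine results."""
    workers = workers or os.cpu_count() or 1
    chunks = split_into_chunks(items, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    data = list(range(100_000))
    print(parallel_sum_of_squares(data))
```

The pieces can run on different cores only because each chunk is independent of the others; work with dependencies between the pieces would need coordination and would gain less.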

Multi-core processors are widely implemented across many application domains, including general-purpose, embedded, network, digital signal processing (DSP), and graphics (GPU). Core count goes up to even dozens, and for specialized chips over 10,000, and in supercomputers (i.e. clusters of chips) the count can go over 10 million.

Some examples include graphics-intensive games such as COD, Overwatch, and other similar 3D games; computer-aided design (CAD) tools such as SolidWorks; multimedia applications; video and image editing software such as Adobe Photoshop, Adobe Premiere and iMovie; scientific tools such as MATLAB; embedded systems such as industrial robots; computer utilities such as Excel; and database servers and servers with high network traffic.

In addition, embedded software is typically developed for a specific hardware release, making issues of software portability, legacy code or supporting independent developers less critical than is the case for PC or enterprise computing. As a result, it is easier for developers to adopt new technologies, and there is a greater variety of multi-core processing architectures and suppliers.

The Need and Current Scenario

There once was a race between Intel and AMD to see which company could build the first 1 GHz processor. Back in 2000, both companies were desperately trying to out-clock the other. The competition was so fierce that both companies claimed victory: AMD with its Athlon CPU and Intel with its Pentium III chip.

The competition didn’t stop at 1 GHz, as both companies then raced on to be the first to hit 2 and then 3 GHz. Around 2004, something odd happened: while the number of transistors on the CPU continued to increase, processor clock speeds began to flatten. The performance continued to improve, but what was driving the interest in cores over clock speed?

A] Multi-core CPUs were introduced:

The race between Intel and AMD continued, but the game had changed from clock speeds to multi-core chips. Around this time many users traded in their single-core Intel Pentium III for dual-core AMD chips running at a slower clock speed. Overall stability of the systems increased along with performance, but only a few programs existed that could take advantage of more than a single core.

Even today, at consumer level, only a few games and a number of post-production tools such as Adobe Premiere are able to take advantage of today’s newest consumer-grade quad and hexa-core CPUs. Others merely extract faster speed and don’t exploit the full potential of multi-core processors.

B] Virtualization

Virtualization is another technology helping to deliver on the promise of MCPs. This is a methodology that allows running multiple operating environments, including a real-time and a general-purpose operating system, on the same hardware device. Virtualization works through the abstraction of associated processing cores, memory, and support devices. Automation system developers increasingly use this extra piece of software in their MCP-based controllers.

Memory management is critical when managing VMs, and is usually the first resource to become constrained. Using multi-core CPUs provides an increase in memory channels, allowing for large blocks of data to be processed and analysed. Allowing the processor to access this data from memory instead of the hard drive results in much better performance.

C] High-Performance Computing

High-performance computing (HPC) is the ability to process data and perform complex calculations at high speeds. To put it into perspective, a laptop or desktop with a 3 GHz processor can perform around 3 billion calculations per second. While that is much faster than any human can achieve, it pales in comparison to HPC solutions that can perform quadrillions of calculations per second.

One of the best-known types of HPC solutions is the supercomputer. A supercomputer contains thousands of compute nodes that work together to complete one or more tasks. This is called parallel processing. It’s similar to having thousands of PCs networked together, combining compute power to complete tasks faster.

HPC can also be very computationally and memory intensive. Some of the most elaborate and expensive servers built at Puget Systems are used for HPC, and it’s not uncommon to find servers running AMD quad-Opteron or Intel quad-Xeon processors with support for up to 64 CPU cores on a single server. HPC is used in almost every field, from biological research to the entertainment industry.

D] Databases and the Cloud

Databases are tasked with running many tasks simultaneously. The more processor cores you have the more tasks you can run. Multi-core processors also allow multiple databases to be consolidated onto a single server. Again, the increased memory bandwidth is the primary reason this is possible.

Most database admins will say there’s no such thing as too much memory, and the latest processors from AMD and Intel allow up to 1 TB of memory on a single workstation-class motherboard.

Cloud environments are also transaction heavy, and multi-core processors allow a company to quickly scale up the number of cores during peak computing times. Energy efficiency is also important to cloud environments. Cores can be turned off or on as needed to optimize workloads and reduce energy.

To summarize, this blog has covered the background needed to understand multi-core processors. We studied why MCPs are worth the investment and how widely they are applied in different industries. We’ve explained how processors have evolved over the years to enable better designs and applications.

CPU performance is increasing rapidly. The number of cores on a chip tends to increase with each new generation of CPUs. Modern electronic technology is getting more and more powerful, accelerating the paradigm shift and creating a new world altogether. Making the most of this improving technology and putting it to the right use is our responsibility as consumers and as human beings.

What is Hyper-Threading?

Hyper-threading was Intel’s first effort to bring parallel computation to end users’ PCs. It was first used on desktop CPUs with the Pentium 4 in 2002.

The Pentium 4 of that time featured just a single CPU core. On its own, such a core can perform only one task at a time and cannot carry out multiple operations simultaneously.

A single CPU core with hyper-threading appears as two logical CPUs to the operating system. The core is physically single, but the OS sees two CPUs for each core, while the CPU hardware has only a single set of execution resources for every core.

In other words, the CPU presents itself as having more cores than it actually does, and the operating system assumes two CPUs for each physical CPU core.
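To see the logical CPU count the operating system reports, Python's standard `os.cpu_count()` can be queried. The sketch below is illustrative only: the standard library does not report the physical core count, so the note about halving the figure assumes a machine with two hardware threads per core.

```python
import os

# os.cpu_count() returns the number of logical CPUs, i.e. what the operating
# system schedules work on. It may return None if the count is undetermined.
logical_cpus = os.cpu_count() or 1

print(f"Logical CPUs visible to the OS: {logical_cpus}")

# Assumption: on a hyper-threaded CPU with two hardware threads per core,
# the number of physical cores would be half of the logical count.
```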

Summary:

- A thread is a unit of execution in concurrent programming.

- Multithreading refers to running multiple threads of execution within an operating system.

- Many modern CPUs support multithreading.

- Hyper-threading was Intel’s first effort to bring parallel computation to end users’ PCs.

- A CPU core is an independent processing unit within the CPU that receives and executes instructions.

- In an operating system, concurrency is defined as the ability of a system to run two or more programs in overlapping time phases.

- In parallel execution, the tasks to be performed by a process are broken down into sub-parts.

- The main issue with a single-core processor is that in order to execute tasks faster, you need to increase the clock speed.

- Multicore resolves this issue by placing two or more cores on the same die to increase the processing power, while keeping the clock speed at an efficient level.

- The biggest benefit of a multicore system is that it puts more transistors to work on a single chip.

- CPU cores are the actual hardware components, whereas threads are the virtual components that manage the tasks.

Multicore Challenges

Having multiple cores on a single chip gives rise to some problems and challenges. Power and temperature management are two concerns that can increase exponentially with the addition of multiple cores. Memory/cache coherence is another challenge, since multicore designs typically have distributed L1 and in some cases L2 caches which must be coordinated. And finally, using a multicore processor to its full potential is another issue. If programmers don’t write applications that take advantage of multiple cores there is no gain, and in some cases there is a loss of performance. Applications need to be written so that different parts can be run concurrently (without any ties to another part of the application that is being run simultaneously).

Power and Temperature

If two cores were placed on a single chip without any modification, the chip would, in theory, consume twice as much power and generate a large amount of heat. In the extreme case, if a processor overheats your computer may even combust. To account for this, multicore designs typically run the cores at a lower frequency to reduce power consumption. To combat unnecessary power consumption, many designs also incorporate a power control unit that has the authority to shut down unused cores or limit the amount of power. By powering off unused cores and using clock gating, the amount of leakage in the chip is reduced.

To lessen the heat generated by multiple cores on a single chip, the chip is architected so that the number of hot spots doesn’t grow too large and the heat is spread out across the chip. As seen in Figure 7, the majority of the heat in the CELL processor is dissipated in the Power Processing Element and the rest is spread across the Synergistic Processing Elements. The CELL processor follows a common trend to build temperature monitoring into the system, with its one linear sensor and ten internal digital sensors.
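Why lower frequencies help can be sketched with the standard approximation for dynamic (switching) power, P = C · V² · f. This formula is not taken from the text above, and every number in the example (capacitance, voltages, frequencies) is an illustrative assumption rather than a measurement; leakage power is not modelled.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Approximate dynamic power in watts: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Assumed effective switched capacitance per core, in farads.
CAPACITANCE = 1e-9

# One core clocked high, which is assumed to need a higher supply voltage.
one_fast_core = dynamic_power(CAPACITANCE, 1.2, 3.0e9)

# Two cores clocked lower, which is assumed to allow a lower supply voltage.
two_slow_cores = 2 * dynamic_power(CAPACITANCE, 1.0, 2.0e9)

print(f"one core at 3 GHz:  {one_fast_core:.2f} W")
print(f"two cores at 2 GHz: {two_slow_cores:.2f} W")
```

Under these assumed numbers, the two slower cores offer more total cycles per second than the single fast core while drawing somewhat less dynamic power, because power scales with the square of the voltage.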
Cache Coherence

Cache coherence is a concern in a multicore environment because of distributed L1 and L2 caches. Since each core has its own cache, the copy of the data in that cache may not always be the most up-to-date version. For example, imagine a dual-core processor where each core has brought a block of memory into its private cache. One core writes a value to a specific location; when the second core attempts to read that value from its cache, it won’t have the updated copy unless its cache entry is invalidated and a cache miss occurs. This cache miss forces the second core’s cache entry to be updated. If this coherence policy weren’t in place, garbage data would be read and invalid results would be produced, possibly crashing the program or the entire computer.

In general there are two schemes for cache coherence: a snooping protocol and a directory-based protocol. The snooping protocol only works with a bus-based system, and uses a number of states to determine whether or not it needs to update cache entries and whether it has control over writing to the block. The directory-based protocol can be used on an arbitrary network and is, therefore, scalable to many processors or cores, in contrast to snooping, which isn’t scalable. In this scheme a directory holds information about which memory locations are being shared in multiple caches and which are used exclusively by one core’s cache. The directory knows when a block needs to be updated or invalidated.

Intel’s Core 2 Duo tries to speed up cache coherence by being able to query the second core’s L1 cache and the shared L2 cache simultaneously.
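The dual-core scenario described above can be mimicked with a toy model. This is a deliberately simplified sketch, assuming a write-through, write-invalidate scheme on a shared bus; the `Core` class and its fields are hypothetical, and real snooping protocols such as MESI track more states per cache line.

```python
class Core:
    """A core with a private cache that is invalidated by other cores' writes."""

    def __init__(self, name, memory, bus):
        self.name = name
        self.memory = memory   # shared main memory, modelled as a dict
        self.cache = {}        # address -> value, valid lines only
        self.misses = 0
        self.bus = bus         # list of all cores attached to the shared bus
        bus.append(self)

    def read(self, address):
        if address not in self.cache:
            # Cache miss: fetch the current value from main memory.
            self.misses += 1
            self.cache[address] = self.memory[address]
        return self.cache[address]

    def write(self, address, value):
        # Broadcast an invalidation so no other core keeps a stale copy.
        for other in self.bus:
            if other is not self:
                other.cache.pop(address, None)
        # Write-through: update the local cache and main memory together.
        self.cache[address] = value
        self.memory[address] = value


memory = {0x10: 1}
bus = []
core_a = Core("A", memory, bus)
core_b = Core("B", memory, bus)

core_a.read(0x10)                    # both cores bring the block into their caches
core_b.read(0x10)
core_a.write(0x10, 42)               # invalidates core B's copy
value_seen_by_b = core_b.read(0x10)  # miss, then reload of the updated value

print(value_seen_by_b, core_b.misses)  # prints: 42 2
```

Without the invalidation loop in `write`, core B would keep returning the stale value 1 from its own cache, which is the failure the coherence policy exists to prevent.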

AMD’s design, however, has to monitor cache coherence in both L1 and L2 caches. This is sped up using the HyperTransport connection, but still has more overhead than Intel’s model.

Multithreading

The last, and most important, issue is using multithreading or other parallel processing techniques to get the most performance out of the multicore processor. “With the possible exception of Java, there are no widely used commercial development languages with [multithreaded] extensions.” Rebuilding applications to be multithreaded means a complete rework by programmers in most cases. Programmers have to write applications with subroutines able to be run on different cores, meaning that data dependencies will have to be resolved or accounted for (e.g. latency in communication or using a shared cache). Applications should also be balanced: if one core is being used much more than another, the programmer is not taking full advantage of the multicore system. Some companies have heard the call and designed new products with multicore capabilities; Microsoft’s and Apple’s newest operating systems can run on up to 4 cores, for example.
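One small example of a data dependency that has to be accounted for is several threads updating the same value. The sketch below uses Python's standard `threading` module and a lock to keep the shared total consistent; the function name and workload are made up for illustration. Note that in standard CPython, threads share a global interpreter lock, so this shows the coordination problem rather than a multicore speedup.

```python
import threading


def count_in_parallel(n_threads: int, increments_per_thread: int) -> int:
    """Increment one shared counter from several threads, guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def worker():
        nonlocal total
        for _ in range(increments_per_thread):
            # The read-modify-write on the shared value must not interleave
            # with another thread's update, so it is done under the lock.
            with lock:
                total += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return total


result = count_in_parallel(4, 1000)
print(result)  # prints: 4000
```

Each thread is given the same number of increments, which also reflects the balancing point above: an uneven split would leave some workers idle while others finish.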

Open Issues

Improved Memory System

With numerous cores on a single chip there is an enormous need for increased memory. 32-bit processors, such as the Pentium 4, can address up to 4 GB of main memory. With cores now using 64-bit addresses, the amount of addressable memory is, for practical purposes, almost unlimited. An improved memory system is a necessity; more main memory and larger caches are needed for multithreaded multiprocessors.
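The difference between 32-bit and 64-bit addressing can be checked with a quick calculation. This sketch assumes byte-addressable memory and computes only the theoretical limit; actual processors and operating systems support far less physical memory than the 64-bit figure.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2 ** address_bits


GIB = 2 ** 30  # bytes in one gibibyte
EIB = 2 ** 60  # bytes in one exbibyte

limit_32_bit_gib = addressable_bytes(32) // GIB
limit_64_bit_eib = addressable_bytes(64) // EIB

print(f"32-bit addresses: {limit_32_bit_gib} GiB")
print(f"64-bit addresses: {limit_64_bit_eib} EiB")
```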